I wrote a piece for RayWenderlich.com about Collection Data Structures in Swift, and I did a whole lot of performance testing around it that didn’t have room to make it into that piece. I’m going to be going into waaaaay more detail and burying you in charts in this blog post if you want to dive deeper.

The short version is included in that piece: Creating collection data structures in Swift is a hell of a lot faster if you use Foundation classes rather than native Swift classes, but searching through them and manipulating them is either even or faster using Swift native classes.

Big-O Notation Refresher

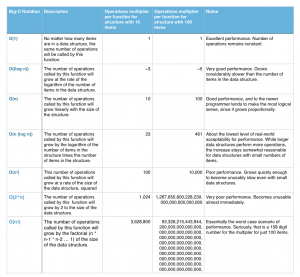

Big-O notation is a computer science concept that defines the worst-case-scenario performance of any function which operates on any kind of data structure. I’ll be referring to performance degradation of these collections using Big-O notation, so here’s a quick refresher on how it works:

When you see Big-O notation written as O(n), O is the notation for the number of operations performed by a function, and n is the number of items in the data structure the function is being performed upon.

The most commonly seen types of Big-O notation are as follows, and are ordered from best to worst performance:

If you’re a more visual learner, an interactive chart showing the rate at which each of these functions will grow visually is available here.

Notes On The Tests I Ran

I compared two primary types of data structures for this post: Foundation data structures, which are available in both Swift and Objective-C (and are generally written in Objective-C or C) and Swift-Only data structures which are written in, well, Swift.

- I wrote a custom application for testing all of this. The application is available here in a version compatible with Xcode 6.1/Swift 1.1.

- HOWEVER, all of the testing referenced in this post was performed a couple months ago while I was writing the post for Wenderlich on Xcode 6 Beta 5, using an iPhone 5 running iOS 8. A different amount of RAM, a different-speed processor, an updated (ie, current) version of Swift, a different version of iOS, an updated version of Xcode, or any number of other factors can cause your results to vary.

- For both Foundation and Swift classes, I used a single type of object (or single type of key and single type of value for Dictionaries). I wanted to make sure I was comparing apples to apples by having everything be the same class, as Swift generally requires in its typed collection classes.

- If you take a look at the

Profilerclass in the application, you’ll see the fairly simple code that measures how long each closure, or block of code, passed in to the profiler takes to execute. One thing the more advanced of you will note: The Profiler is not usingdispatch_benchmark, since that would require some bridging to Objective-C to access, and for my purposes, I wanted these tests to stay purely in Swift. - Everything was run through a single function in base test class,

performFunctionInBackgroundThenLog. This method takes two parameters: A readable name of the function being tested, and a closure testing it. Once the test is done running, the average of the 10 runs as recorded by the Profiler will be logged out to the console. - Everything is executed on a background thread, since I wanted to avoid running into any UI calculations.

- The biggest reason these tests are important is that they are internally consistent – every set of tests was executed on the same iPhone 5, running the same operating system, using the same version of Swift, and using the same version of Xcode.

- I ran one set of tests with optimization on and one set with optimization off. The difference was staggering: A single 10-run pass would take over three *hours* for optimization off, and less than 3 minutes with optimization on.

- Because of this, I ran five sets of tests with optimization off, so the numbers are averages of 50 runs, but only had time when pulling this data together to run it twice with optimization on (so those numbers are an average of 20 runs). I wish I’d had time to run dozens and dozens more tests, but without a better way to compile the data, it would have taken me ages.

- I haven’t rerun these tests on iOS 8.1 or Xcode 6.1, because to be honest, I haven’t had time.

I’ll note that when I was posting complaining about the difference between optimized and unoptimized, I had an interesting back-and-forth with one of the Xcode engineers on whether performance testing with optimization on is a good idea:

@designatednerd XCTest performance testing XCTest is not designed for benchmarking. It’s designed to catch perf regressions @isaacgreenspan

— Joar Wingfors (@joar_at_work) September 16, 2014

@designatednerd To catch regressions relative change matter, absolute values not so much. Use it to lock in known good perf @isaacgreenspan

— Joar Wingfors (@joar_at_work) September 16, 2014

@designatednerd Get a faster machine to run your tests! (No, I get it -that is a problem!) — Joar Wingfors (@joar_at_work) September 16, 2014

I’ll stand by my assertion that if the raw tests are taking 3 hours vs. 3 minutes, something’s screwy.

Anyway, or each of these data structures, you’ll see the performance comparisons for:

- 5000 vs. 25000 items in an array or set, 1000 vs 5000 items in a dictionary

- Optimizer on vs. Optimizer Off

- Swift

Arrayperformance vsNSMutableArrayPerformance - Big-O performance degradation

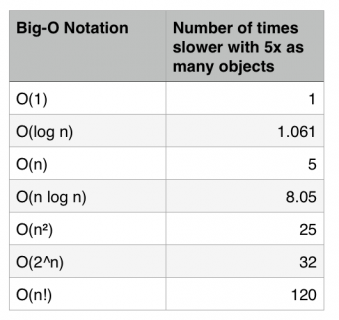

Since the number of items is increasing by 5 times, the relative speed with which each operation increases in terms of Big-O notation would follow the following chart:

NOTE (added 10/4/2014 2:20pm): Ryan Govestes pointed out that O(n) is technically referring to the number of functions called changes, not the rate at which the time it takes to call functions is changing. This is certainly a key distinction, but in a situation where we can’t see what functions are being called, the practical implications of how long it actually takes to perform the functions are a reasonable way to approximate this.

So how did Swift and Foundation classes actually perform? It’s time for the parade of charts!

Arrays

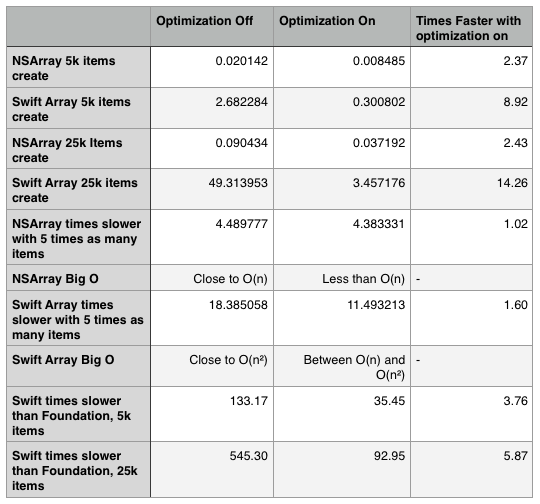

First up, creating an array:

Clearly, turning on performance optimization is very, very helpful in Swift Array creation.

Swift Array creation still has pretty poor performance compared to NSArray creation even with optimization on. Going above O(n log n) means that the performance is degrading very quickly.

You can also see from these numbers that point from which the numbers are increasing is considerably lower when optimization is on for Swift – therefore Swift Arrays will remain reasonably useful at considerably larger sizes with optimization on than when optimization is off.

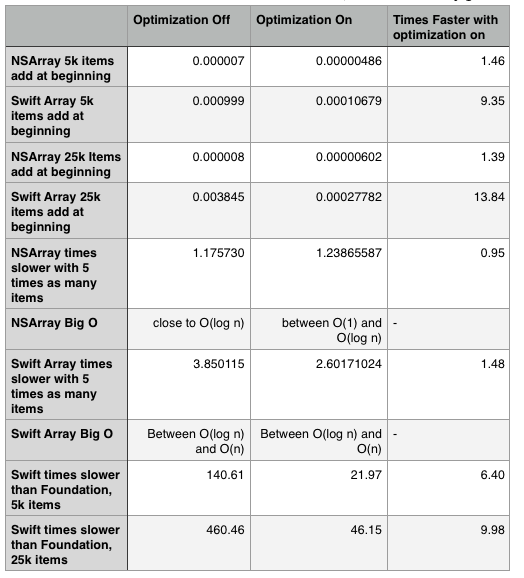

But really, most often, you only create an array once. It’s always the most computationally expensive part of what you need to do. What about adding an object?

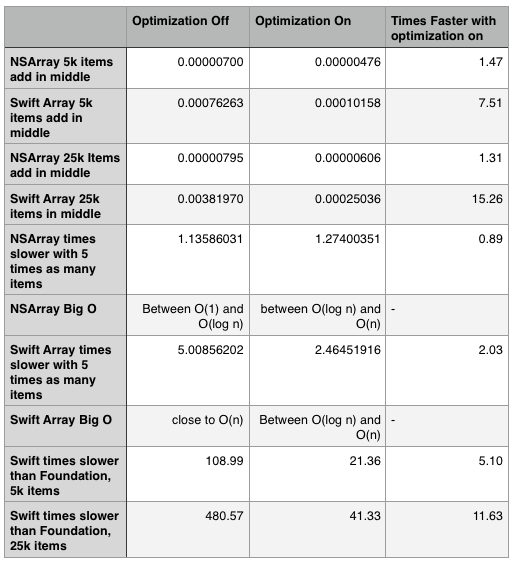

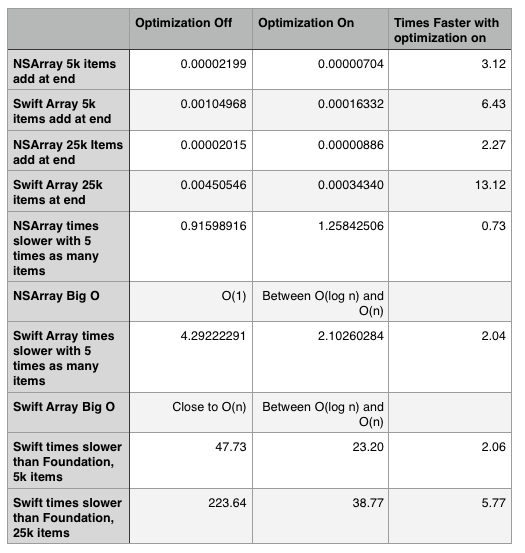

With optimization on, Swift Arrays actually grow at considerably lower rate than NSMutableArray when inserting items at the beginning of an array, and never get worse than O(n) no matter where the insertion occurs.

NSMutableArrays are still at least 23 times faster than Swift Arrays, but even in the worst case scenario, the insertion time has dropped from hundredths to thousandths of a second for Swift Arrays.

Swift Arrays are still somewhat slower than NSMutableArrays, but the difference goes from millionths of seconds to thousandths – if you’re not working with a very large number of items, this performance difference is not going to affect your application.

One odd thing to note: NSMutableArray performance adding an object at the beginning of an array actually got worse with optimization on. There’s not really a good way to know why without knowing about the internals of NSMutableArray optimization, but it’s certainly interesting to note that turning on optimization doesn’t always make things faster.

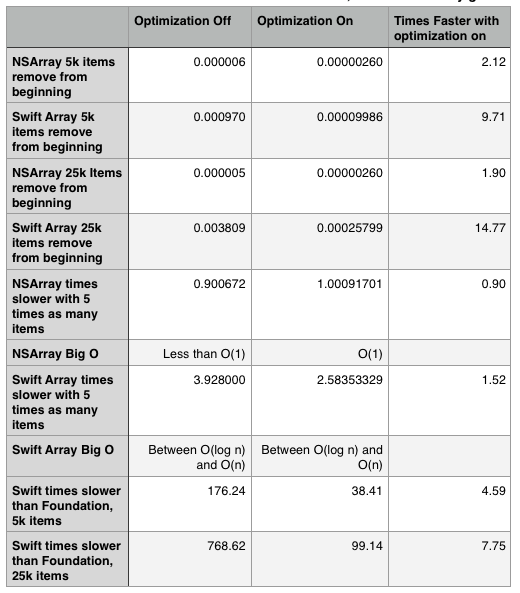

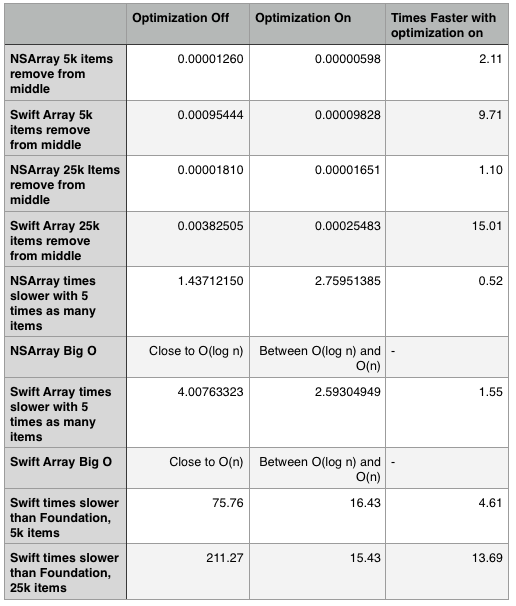

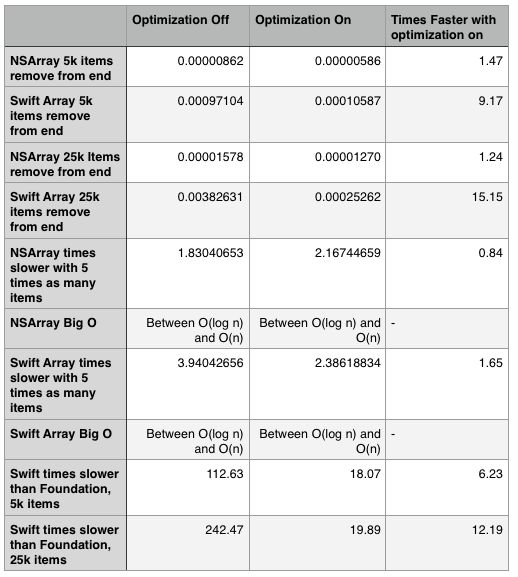

Next, take a look at the performance of removing items:

As one might expect, the numbers for removing an item are fairly similar to the numbers for adding an item.

Optimization again makes a tremendous difference, but you can see that even with optimization on, removal takes longer with Swift than it does with an NSArray, and the performance degradation in Swift is considerably more obvious.

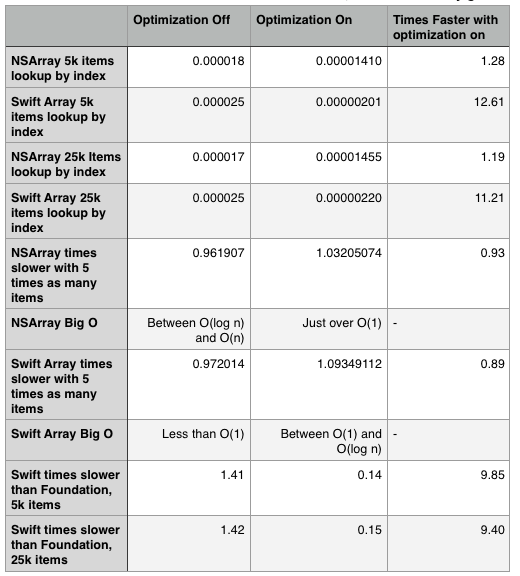

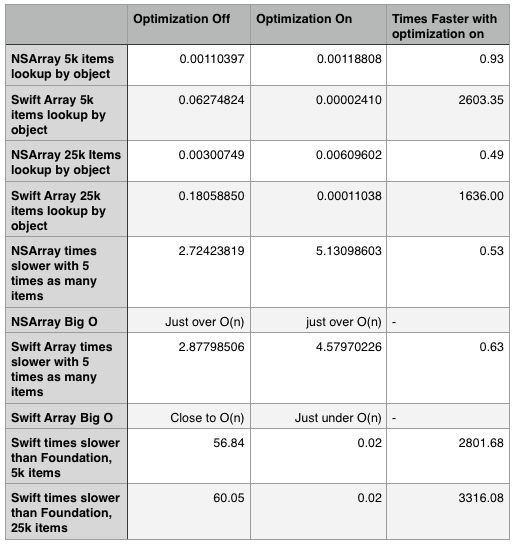

Next, look at the performance of looking up specific objects or indexes in an array:

Aha! Here’s where Swift earns its name, even without the assistance of optimization. This explains some of why Jesse Squires found that Swift Arrays are faster at sorting than NSArrays as of Xcode 6 Beta 5. With bigger arrays, the Swift Array's performance is actually 50 times faster than NSMutableArray's for looking up by object.

Swift Arrays' type safety certainly helps a lot for lookup by object, which operates without the overhead of having an array which could contain any object. NSArray has to first determine the type of each object, then figure out if it is equal to the object you’re seeking.

Dictionaries

Why did I use fewer items in the dictionary tests in the array tests? Firstly, Dictionary creation in both Swift Dictionaries and NSDictionaries is considerably more expensive than NSMutableArray creation – if you compare the numbers for each, you’ll see that they’re similar or worse with 1/5th the number of items.

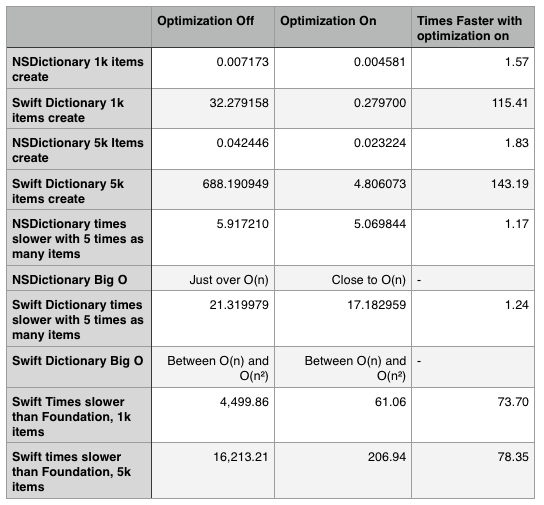

Secondly, Swift Dictionary creation with optimization off is particularly terrifying:

Every time the 5000-item dictionary test suite was run with the optimization off, it would take an hour and 45 minutes to run just the Swift Dictionary creation test. To get 5 runs of all of the dictionary test cases with optimization off took a very, very long time.

A 5,000 item Swift Dictionary took over sixteen thousand times longer to create than its NSMutableDictionary counterpart with optimization turned off.

With optimization on, a 5,000 item Swift Dictionary took only(!) 206 times longer than 5,000 item NSMutableDictionary took.

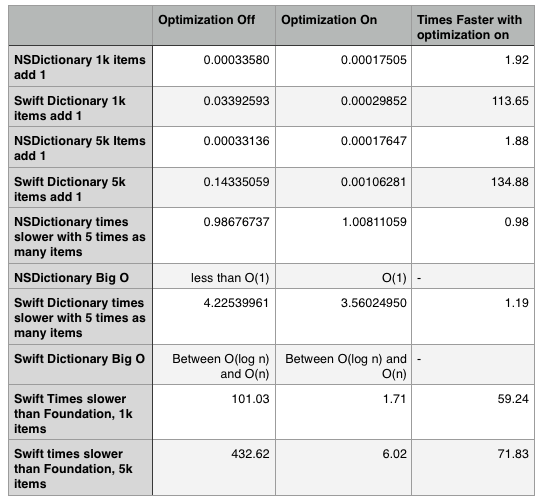

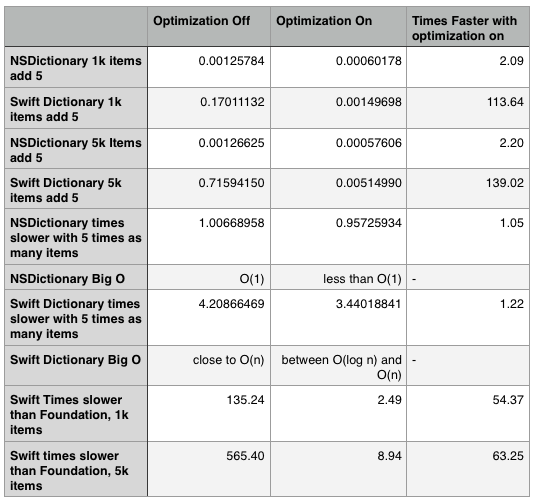

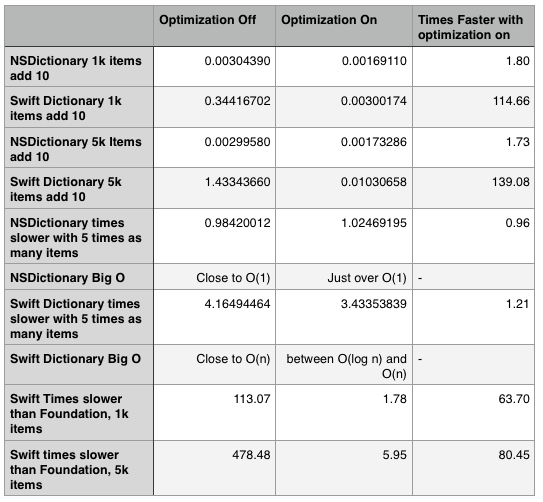

Since dictionaries store objects without any particular order, you can only test adding or removing a given number of objects to them. Here are the results for adding 1, 5, and 10 dictionaries:

Adding objects to an NSMutableDictionary is very, very efficient – it’s close to or less than O(1), which means that no matter how big your NSMutableDictionary gets, the time it will take to add a new item will be close to constant.

Swift Dictionary performance is (surprise!) an awful lot better with optimization on. But having optimization on also makes it easy to see how quickly the comparative performance degrades even when below O(n).

Swift Dictionaries‘ numbers against NSMutableDictionary are certainly a lot closer, but even bumping up from 1,000 to 5,000 items, you still see a major increase in the difference between the two.

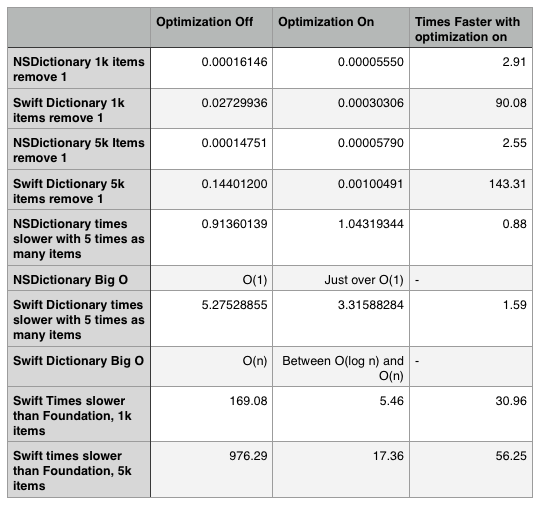

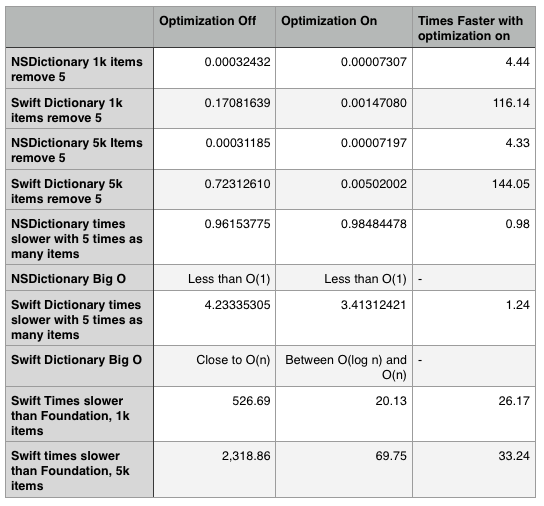

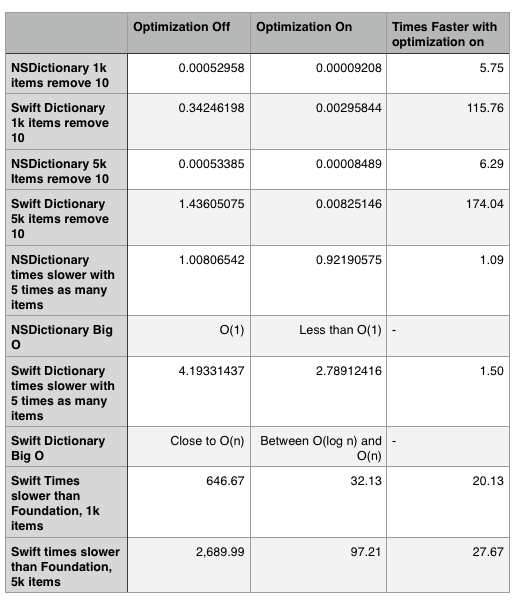

Now, the results for removing 1, 5, and 10 dictionaries:

Again, NSDictionary is never more than O(log n) times slower with more items, which for dictionaries of this size is very close to O(1). This is exceptionally strong performance.

Swift Dictionaries‘ performance isn’t as close when removing an object as it is to NSMutableDictionaries‘ when adding an object, but the rate of increase is fairly similar.

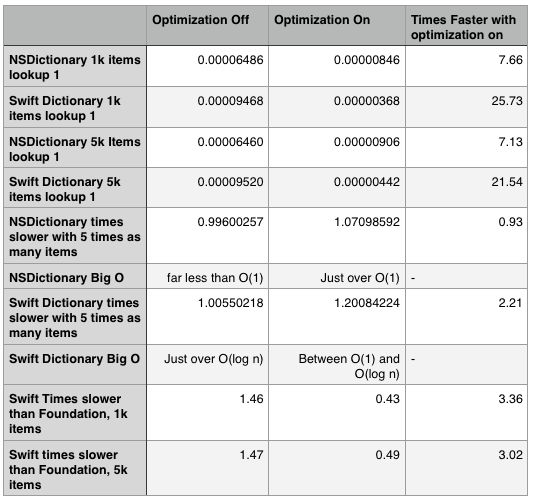

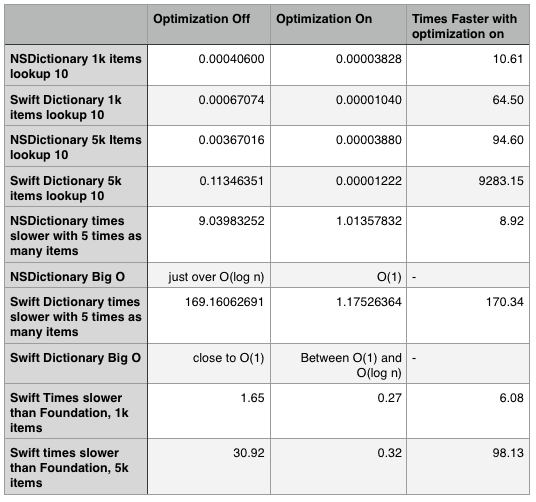

In dictionaries, you use the value of the key to look up an object – there is not a straightforward way to look up a value without its key. Therefore, here the tests are looking up either 1 random object or a batch of 10 random objects:

Once again: Swift is…swifter, even with the optimization off.

The performance doesn’t improve quite as dramatically as it has from NSMutableArrays to Swift Arrays, but being more than three times as fast at a 5000 item size is still a significant improvement.

Sets

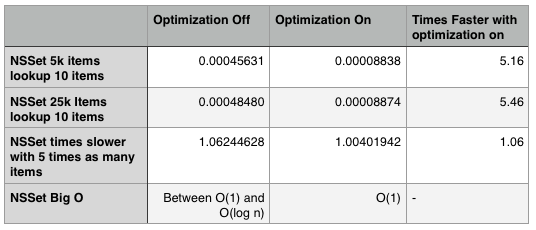

Sets are also the one major type of data structure in iOS that does not (yet) have a Swift-only equivalent to its Foundation API. Therefore, I only looked at NSSet.

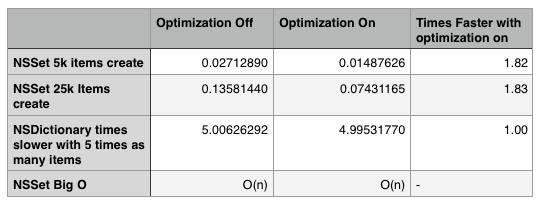

Here are the results for creating 5,000 vs. 25,000 object sets:

NSMutableSet performance clearly improves more with optimization on than NSMutableArray and NSMutableDictionary, but the rate of degradation sits squarely near O(n) in both cases as the set gets larger.

If you’re looking for pure speed, the NSMutableSet isn’t great – if you compare the numbers here to the numbers for NSMutableArrays, set creation is considerably slower. But that’s the price you pay for needing to check if every single item in a data structure is unique.

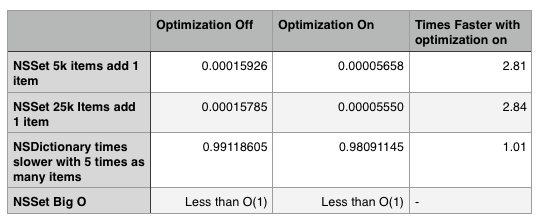

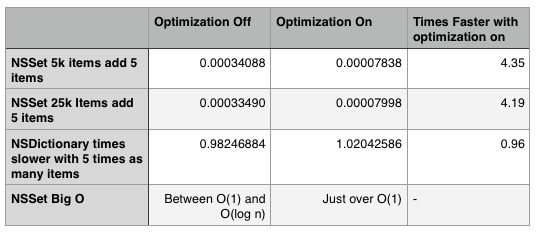

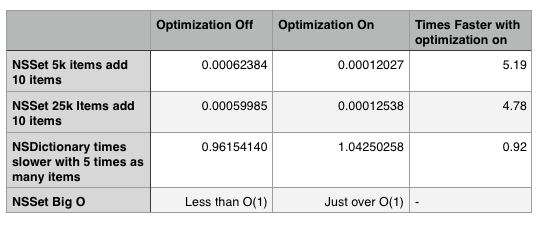

How about adding items? Here are the numbers for adding 1, 5, and 10 items:

You can see that while adding the same number of items increases at or near an O(1) level as you continue to increase the number of items in the set, adding more items continues to grow at closer to an O(n) rate.

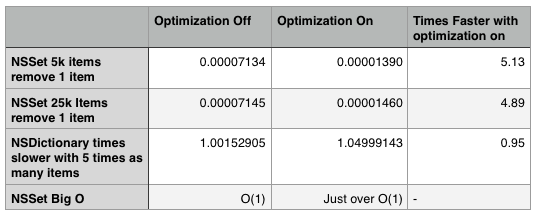

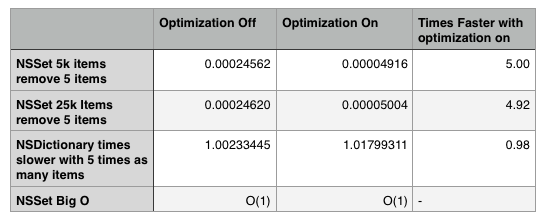

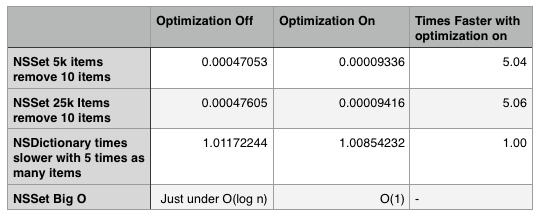

Here are the numbers for removing one, 1, 5, and 10 items:

Removing an item from an NSMutableSet is considerably faster than adding an item – after all, no check as to whether the item is unique is required to remove it.

As with adding items, while the numbers for removing the same number of items from a set of any size stay in the general vicinity of O(1), removing more items from a set of the same size increases closer to O(n).

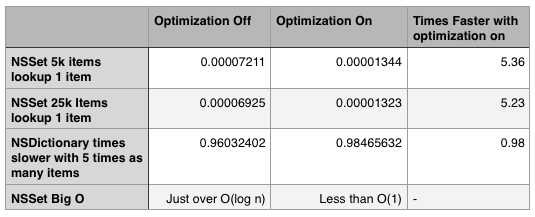

And here are the numbers for looking up 1 and 10 items:

NSMutableSet lookup is slower than NSMutableArray lookup by object, but it is faster than looking up in NSMutableArray by object.

Conclusions

And again, with the caveat that I haven’t had time to rerun these tests against Xcode 6.1/Swift 1.1:

If you’re using Swift, if you simply need to create and store large arrays or dictionaries without doing much lookup work, you should create them using Foundation classes, because it will be considerably faster.

If you need to do a lot of lookup work, you should balance the performance hit in creation vs. the performance improvement in lookup.

Raw Data

Here is a .zip of the hideous .numbers spreadsheet I used to calculate all of this, and here is a .zip of the Raw Console Output if you really want to go through everything.

I encourage you to run the (optimized) tests on iOS 8.1 and see what’s changed – I really do wish I’d had time to do that, but I really just haven’t had the opportunity.

Got more questions, want to tell me why my measurements are BS, or want to further dissect these numbers? Please feel free to leave a comment

Pingback: Looking Into Data Structures in SwiftVokal Interactive | Vokal Interactive

Pingback: Arrays + Dictionaries + Sets – Swift Bandit